Augmented Reality at the WTCE: How exactly does it work?

An interview with Martin Buchholtz (Innovation and Research, LSG Group) and Oliver Tappe (Global IT, LSG Group)

As recently reported here on our blog, the LSG Group will use Augmented Reality (AR) at the WTCE. A wide variety of dishes will be presented virtually on high-quality tableware in order to illustrate its diverse fields of application. One example uses a plate. If you film it with a smartphone or tablet camera (with the right software installed), the screen shows not only the plate but also a complete virtual dish on it in 3D. The plate can then be examined from every possible angle, giving trade-fair guests a good idea of how the plate could be used in service. But how does it actually work?

ONE: Martin, Oliver, how does the virtual food actually get on the plate?

Martin: First of all, you have to define what is to be displayed on what. In our case, for example, it is food on a plate. Therefore, in addition to the exact dimensions of the plate, we also need an exact picture of the meal. This is the only way we can display an almost perfect 3D image of food on an empty plate. The trigger is a marker code on the plate. You can use the brand logo for this purpose, for example. If the camera or software can recognize this code, it will display the virtual food in the appropriate place.
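To make the marker idea a little more concrete, here is a minimal sketch of how an app might work out where to draw the food once it has spotted the marker. It is a flat, 2D simplification and not the software used for the WTCE demo; the function name `place_overlay`, its parameters, and all numbers are assumptions made up for illustration. A real AR app would estimate a full 3D pose instead.

```python
import math

def place_overlay(marker_center_px, marker_width_px, marker_angle_deg,
                  marker_width_mm, offset_to_plate_center_mm):
    """Return (x_px, y_px, pixels_per_mm, angle_deg) for drawing the virtual dish.

    Hypothetical helper: the marker's known physical width gives the scale,
    and the known offset from marker to plate center (measured in the
    marker's own frame) tells us where the food belongs.
    """
    # How many screen pixels correspond to one millimetre on the real plate.
    scale = marker_width_px / marker_width_mm

    # Rotate the marker-to-center offset by the marker's on-screen angle,
    # then convert it from millimetres to pixels.
    theta = math.radians(marker_angle_deg)
    dx_mm, dy_mm = offset_to_plate_center_mm
    dx_px = (dx_mm * math.cos(theta) - dy_mm * math.sin(theta)) * scale
    dy_px = (dx_mm * math.sin(theta) + dy_mm * math.cos(theta)) * scale

    cx, cy = marker_center_px
    return (cx + dx_px, cy + dy_px, scale, marker_angle_deg)


if __name__ == "__main__":
    # Assumed example: a 25 mm logo seen 50 px wide, plate center 100 mm away.
    print(place_overlay((100, 200), 50, 0, 25, (0, -100)))
```

This is also why the exact dimensions of the plate matter: without the real-world size of the marker and its distance to the plate center, the app could not scale or position the food correctly.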

ONE: Sounds quite simple. Is it?

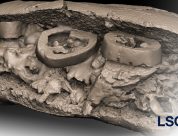

Oliver: Well, it takes a level of effort you shouldn’t underestimate. You need a perfect picture of the food from almost every angle. This is the only way you can achieve the 3D effect. So, the food has to be photographed with the most even lighting possible from 80 to 150 different angles. In our case, each of these images has a resolution of 40 megapixels, which lets you see even the smallest detail. After you photograph the food, you have to feed the photo data into an above-average computer that has several graphics cards and special software. The computer then takes a few hours to calculate a 3D model from the individual pictures. To do this, the software recognizes particularly prominent points in one image and then finds those same points in the next image and in every subsequent one. As the software recognizes, or calculates, which picture was taken from which perspective, the 3D image begins to take shape. With sufficiently high resolution and enough processing power, we can create a 3D model that has over one million polygons, simply from the angle changes between the images. The pictures also supply the textures for the model in order to represent the structure as realistically as possible, like the marbling of the meat, for instance. In the last step, you have to rework the model manually. The background is removed, for example, to reduce the number of unneeded polygons. The result is a model that has about 50,000 to 70,000 polygons, which will still look very good when virtualized and perform well enough to be calculated in real time on a tablet or smartphone.
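The step of finding the same prominent points again in the next picture can be sketched in a few lines. The interview does not name the software or its algorithm, so what follows is only one common approach, assumed here for illustration: each point is described by a small list of numbers (a "descriptor"), and a point is matched to its nearest neighbour in the other image only if that neighbour is clearly closer than the runner-up. The function name `match_points`, the tiny 2-number descriptors, and the `ratio` value are all hypothetical.

```python
import math

def match_points(desc_a, desc_b, ratio=0.75):
    """Match descriptors from image A to descriptors from image B.

    Returns a list of (index_in_a, index_in_b) pairs. A pair is kept only
    if the best candidate is clearly closer than the second best, which
    filters out ambiguous points (for example repeating patterns).
    """
    matches = []
    for i, da in enumerate(desc_a):
        # Distance from this point's descriptor to every descriptor in B.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) < 2:
            continue  # need a runner-up to judge ambiguity
        (best, j), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((i, j))
    return matches


if __name__ == "__main__":
    image_a = [(0.0, 0.0), (10.0, 10.0), (5.0, 5.0)]
    image_b = [(10.1, 10.0), (0.1, 0.0), (4.0, 6.0), (6.0, 4.0)]
    # The third point in image_a has two equally good candidates and is dropped.
    print(match_points(image_a, image_b))
```

With enough reliable matches across many pictures, software can work out where each camera stood and where each point sits in space, which is the part that takes hours on real 40-megapixel photos.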

ONE: And polygons are…

Oliver: They are angular elements. In fact, a 3D model is made up of a multitude of small polygons that form the whole. Due to the extreme detail of the image, however, it does not appear angular. So, the food looks like it is actually sitting in front of you.
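A quick back-of-the-envelope calculation shows why many small angular pieces stop looking angular. As a simplified 2D stand-in for a 3D model, the sketch below measures how far a regular polygon drawn inside a circle deviates from the true round edge. The 100 mm radius and the function name `max_gap_mm` are assumptions chosen purely for illustration.

```python
import math

def max_gap_mm(radius_mm, sides):
    """Largest gap between a circle and the regular polygon inscribed in it.

    The gap is biggest at the middle of each straight edge and equals
    radius * (1 - cos(pi / sides)).
    """
    return radius_mm * (1 - math.cos(math.pi / sides))


if __name__ == "__main__":
    # Assumed radius of 100 mm; more sides means a smaller, less visible gap.
    for sides in (8, 64, 512):
        print(sides, "sides:", round(max_gap_mm(100, sides), 4), "mm")
```

The gap shrinks very quickly as the number of edges grows, and the same principle applies to the tens of thousands of polygons in a 3D food model.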

ONE: Sounds exciting!

Martin: It is! We are very much looking forward to surprising our trade-fair guests with this presentation and getting into conversations. If you haven’t written it down yet, here it is again: we will be welcoming visitors from the 10th to the 12th of April at the WTCE in Hamburg, Hall A1, Stand 1E20.